Bayesian Statistics¶

The process of Bayesian data analysis can be idealized by dividing it into the following three steps (from Gelman et al. (2013)) :

Setting up a full probability model---a joint probability distribution for all observable and unobservable quantities in a problem. The model should be consistent with knowledge about the underlying scientific problem and the data collection process.

Conditioning on observed data: calculating and interpreting the appropriate posterior distribution—the conditional probability distribution of the unobserved quantities of ultimate interest, given the observed data.

Evaluating the fit of the model and the implications of the resulting posterior distribution: how well does the model fit the data, are the substantive conclusions reasonable, and how sensitive are the results to the modeling assumptions in step 1? In response, one can alter or expand the model and repeat the three steps.

Notation¶

- θ : unobservable parameters that we want to infer

- : observed data

- : unobserved but potentially observable quantity

- : vector quantity always assumed to be a column vector

- : probability density function or discrete probability (from context)

- : conditional probability

Bayes’ Theorem¶

In order to make probability statements about θ given , we must build a model that provides a joint probability distribution for θ and . We can write the joint distribution as a product of two distributions:

- the prior and

- the sampling distribution . For fixed we call this the likelihood

Assuming we are given instead, we could write

Putting these together, we arrive at Bayes’ Theorem:

Typically, computing in (3), called the evidence, is intractable. However, it is a constant. Consequently, we often write Bayes’ Theorem as a proportional relation:

Example from Genetics¶

This example is also from Gelman et al. (2013).

Biological human males have one X-chromosome and one Y-chromosome, whereas females have two X-chromosomes, each chromosome being inherited from one parent. Hemophilia is a disease that exhibits X-chromosome-linked recessive inheritance, meaning that a male who inherits the gene that causes the disease on the X-chromosome is affected, whereas a female carrying the gene on only one of her two X-chromosomes is not affected. The disease is generally fatal for women who inherit two such genes, and this is rare, since the frequency of occurrence of the gene is low in human populations.

Prior distribution: Consider a woman who has an affected brother, which implies that her mother must be a carrier of the hemophilia gene with one “good” and one “bad” hemophilia gene. Her father is not affected; thus the woman herself has a fifty-fifty chance of having the gene. Therefore, the woman is either a carrier of the gene or not . The prior distribution for the unknown θ is then

Data model and likelihood: The data will be the the status of the woman’s sons. Let or 0 denote an affected or unaffected son, respectively. Suppose she has two sons, neither of whom is affected. Assume that there is no covariance between the sons’ conditions. The likelihood is then

Posterior distribution: We can now use Bayes’ Theorem in the form (3) to find the posterior probability that the woman has the gene. Let .

Solution to Exercise 1 #

Updating Our Knowledge: Assume we find a long-lost third son that also does not carry the gene. We can use the the posterior from the previous analysis as the prior for our new analysis with the third son included.

Solution to Exercise 2 #

Building Bayesian Models with Probabilistic Programming Languages¶

Let’s switch over from genetics to astronomy and learn how to infer the value of the Hubble constant.

I’ve prepared some data at h_z_measurements.feather.

First, lets import the packages we’ll need for this section.

import jax

import jax.numpy as jnp

import matplotlib.pyplot as plt

import arviz as az

import numpyro

import pandas as pd

from numpyro import distributions as dist

from numpyro import inferdf = pd.read_feather("h_z_measurements.feather")

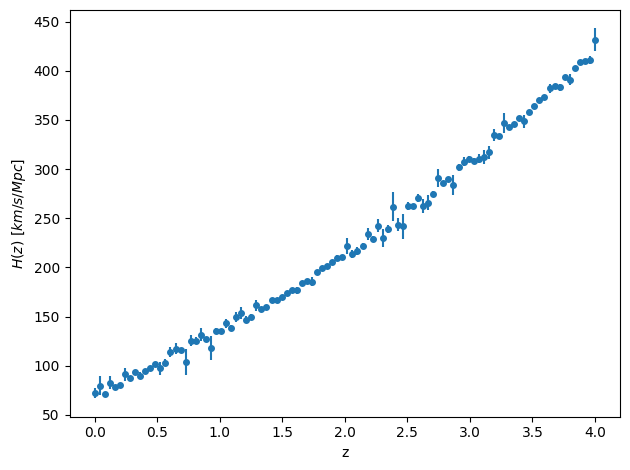

df_ = plt.errorbar(

df["z"], df["H_z"], yerr=df["H_z_err"], fmt="o", markersize=4

)

plt.xlabel("z")

plt.ylabel(r"$H(z)\ \left[km / s/Mpc\right]$")

plt.tight_layout()

This is inspired by the recent DESI release. We could of course just look at , so this data is overkill. We will expand on this model later to infer other parameters.

To build our model, we need to gather all the information we have about the Hubble parameter as a function of redshift. The Friedmann equations (with some extra terms we’ll talk about later) lead to

To start with, we’ll assume all parameters take their values and infer just on .

Our data are the values observed at the corresponding redshifts. Call them

The data looks to have some random noise scattered around a trend. We’ll choose a Gaussian likelihood to account for this.

- We need a forward model for our expected value that is a function of redshift and . Let’s create that.

def hz_forward(z, h0=67.0, omega_matter=0.3, omega_de=0.7, omega_rad=5.5e-5, w0=-1.0, wa=0.0):

"""Hubble parameter as function of redshift. Default parameters are LCDM."""

a_inv = 1+z

return h0 * jnp.sqrt(

omega_matter * (a_inv) ** 3

+ omega_rad * (a_inv) ** 4

+ omega_de * (a_inv) ** (3 * (1 + w0 + wa)) * jnp.exp(-3 * wa * (z / (a_inv)))

)- We also need to quantify our prior beliefs about . Let’s assume very wide priors and say that we believe with equal probability that can be anywhere from 50 to 100.

- Putting everything together in our notation from earlier,

And finally,

Note that the product comes about because each data point is independent.

How would we implement this in code?¶

This is where a probabilistic (PPL) comes in. A PPL usually has the following capabilities:

- Stochastic primitives and distributions as first-class citizens

- Model building frameworks

- Inference routines that can consume the models and automatically adjust/tune themselves

What PPLs are out there?¶

There are many! The ones that are most popular in the Python ecosystem are

Each has their own benefits and drawbacks. Today, we’ll focus on NumPyro. Let’s see how to implement our Hubble parameter model in NumPyro.

NumPyro Basics¶

NumPyro is a PPL built on JAX. It comes with a large selection of distributions, inference routines, and model building tools.

NumPyromodels are simple Python functions.- Within the function, we can call

numpyro.distributions.<some-distribution>for each parameter we need to sample. - For our likelihood, we can also use a built-in distribution. If we tell

NumPyrowe have observed data, it will automatically calculate the probability for that data given the likelihood.

The easiest way to see this is with an example. Lets fit a simple Gaussian.

Start by creating some data.

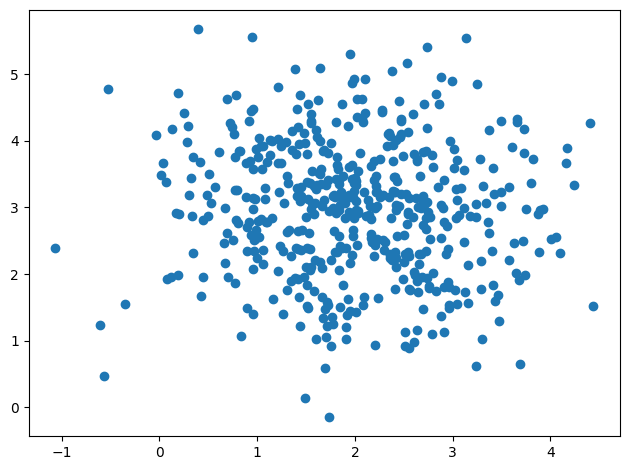

key = jax.random.key(117)

data = jax.random.multivariate_normal(

key, jnp.array([2, 3]), cov=jnp.array([[1, 0], [0, 1]]), shape=(500,)

)WARNING:2025-06-17 15:31:05,833:jax._src.xla_bridge:791: An NVIDIA GPU may be present on this machine, but a CUDA-enabled jaxlib is not installed. Falling back to cpu.

_ = plt.scatter(*data.T)

plt.tight_layout()

Now, we’ll build the NumPyro model. As arguments, it needs to take the input data.

def simple_2d_gaussian(data=None):

# Get the mean vector

mu = numpyro.sample("mu", dist.Uniform(-5, 5), sample_shape=(2,))

# We'll assume a diagonal covariance

cov_diag = numpyro.sample("cov_diag", dist.Uniform(-10, 10), sample_shape=(2,))

# Arbitrary transformations are fine!

# "Deterministic" stores them in the trace

cov = numpyro.deterministic("cov_mat", jnp.diag(cov_diag))

# The data are independent

with numpyro.plate("data", data.shape[0]):

# Get the likelihood

numpyro.sample(

"loglike", dist.MultivariateNormal(loc=mu, covariance_matrix=cov), obs=data

)This creates a model that we can visualize as a probabilistic graphical model or more specifically a Bayesian network.

numpyro.render_model(simple_2d_gaussian, model_args=(data,), render_distributions=True)Now, we can pass off our model to a routine that will approximate the posterior. Let’s first, however, take a detour and let you build your own NumPyro model.

Solution to Exercise 3 #

def hz_model(z, hz, hz_err):

h0 = numpyro.sample("h0", dist.Uniform(50,100))

with numpyro.plate("data", hz.shape[0]):

numpyro.sample("loglike", dist.Normal(hz_forward(z, h0=h0), hz_err), obs=hz)

numpyro.render_model(hz_model, model_args=(df['z'].to_numpy(), df['H_z'].to_numpy(), df['H_z_err'].to_numpy()), render_distributions=True)Approximating Posterior Distributions¶

Now that we have our model built, we need a way to find the posterior distribution of the parameters of interest. In very rare circumstances, our posterior has a closed form expression (see conjugate priors). Most of the time, we need numerical approximations to learn about the posterior.

One of, if not the most, ubiquitous algorithms for this purpose is Markov Chain Monte Carlo

Markov Chain Monte Carlo (MCMC)¶

This algorithm allows us to draw samples from a posterior when we do not know or cannot calculate the posterior distribution from first principles. The name comes from the merging of two statistical ideas: Markov Chains and Monte Carlo processes.

- Markov Chains: a stochastic process describing a sequence of possible events in which the probability of each event depends only on the state attained in the previous event.

- Monte Carlo methods: computational algorithms that rely on repeated random sampling to obtain numerical results

MCMC works in the following way:

- The chain of posterior samples is at

- A new location is drawn from a proposal distribution , which we’ll use .

- The ratio of the target probability density at the proposed location to the current location is calculated, .

- If the jump is accepted, if it’s accepted with a probability of α. If a jump is rejected the current sample is repeated in the chain.

See https://

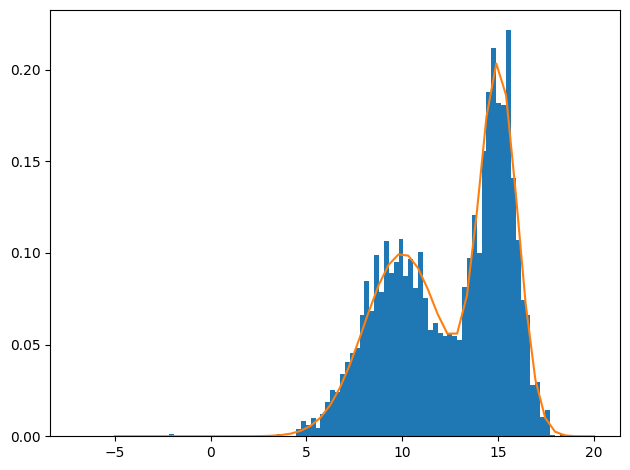

We’ll create a simple distribution by hand and then draw samples from it.

def pdf(x, mu=10, sigma=2.0):

"Gaussian PDF"

return (

1 / jnp.sqrt(2 * jnp.pi * sigma**2) * jnp.exp(-((x - mu) ** 2) / (2 * sigma**2))

)

def p(x):

return 0.5 * (pdf(x, mu=10, sigma=2.0) + pdf(x, mu=15, sigma=1))from scipy.stats import norm

from tqdm import tqdm

key , _= jax.random.split(key)

x0 = -7.0

x = x0

p_current = p(x0)

chain = [x]

probs = [p_current]

niter = 10000

sigma_jump = 5.

for i in tqdm(range(niter)):

xp = norm.rvs(loc=x, scale=sigma_jump)

p_p = p(xp)

α = p_p/p_current

u = jax.random.uniform(key)

key, _ = jax.random.split(key)

accepted = u < α

if accepted:

x = xp

p_current = p_p

chain.append(x)

probs.append(p_current)100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 10000/10000 [00:03<00:00, 3160.57it/s]

plt.hist(chain, bins=100, density=True)

_ = plt.plot(jnp.linspace(-5,20), p(jnp.linspace(-5,20)))

plt.tight_layout()

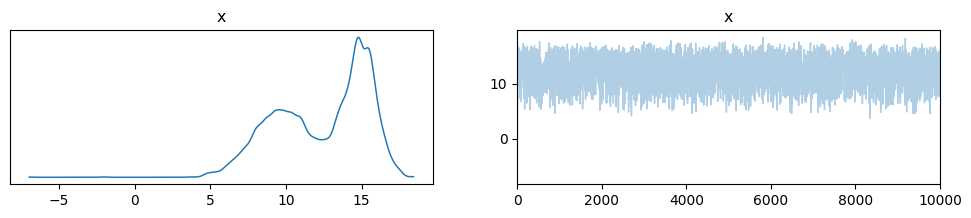

We can use ArviZ to explore our posterior more. Here’s an example.

simple_inf_data = az.from_dict({"x":chain})

_ = az.plot_trace(simple_inf_data)

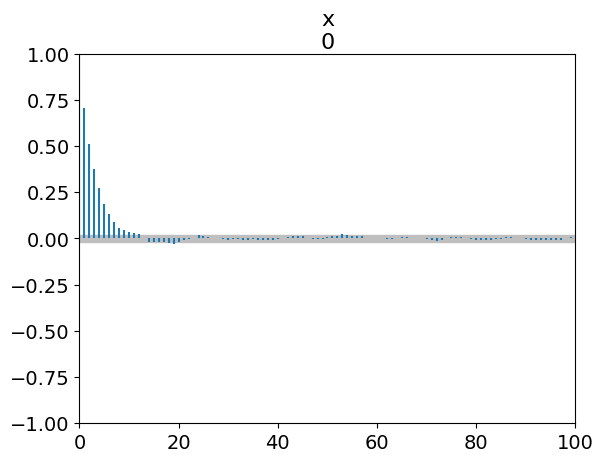

_ = az.plot_autocorr(simple_inf_data)

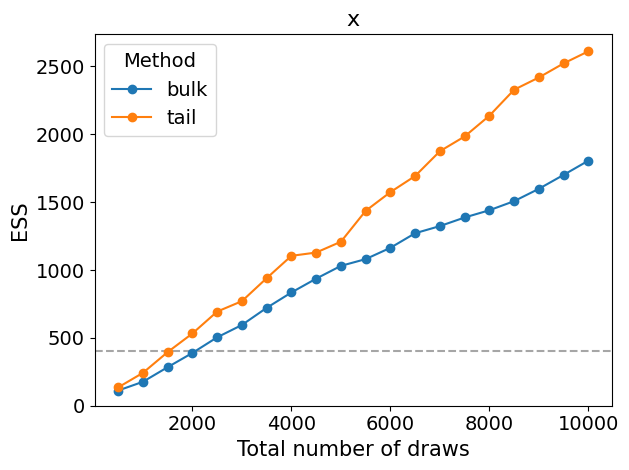

_ = az.plot_ess(simple_inf_data, kind="evolution")

plt.tight_layout()

Other Algorithms Beyond MCMC¶

MCMC works well, and often it is all you need. However, there are a plethora of sampling algorithms to explore. Here are a few you may encounter.

Parallel Tempering (Replica Exchange Monte Carlo)¶

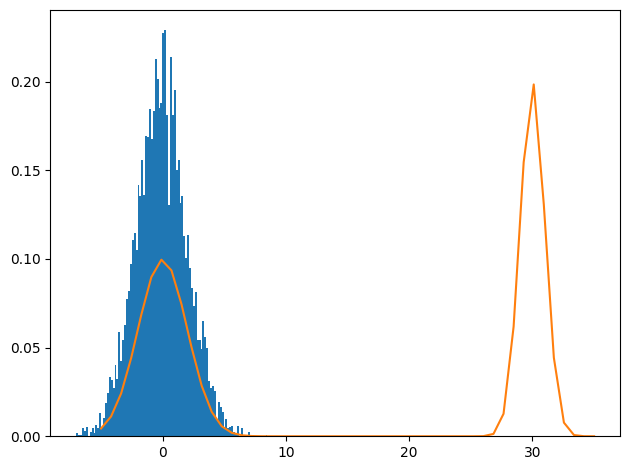

Our test distribution above was bimodal, but there was enough overlap for the sampler to explore both modalities. What would happen as we increase the bimodality?

def p_big_bimodal(x):

return 0.5 * (pdf(x, mu=0, sigma=2.0) + pdf(x, mu=30, sigma=1))

key , _= jax.random.split(key)

x0 = -7.0

x = x0

p_current = p_big_bimodal(x0)

chain = [x]

probs = [p_current]

niter = 10000

sigma_jump = 5.

for i in tqdm(range(niter)):

xp = norm.rvs(loc=x, scale=sigma_jump)

p_p = p_big_bimodal(xp)

α = p_p/p_current

u = jax.random.uniform(key)

key, _ = jax.random.split(key)

accepted = u < α

if accepted:

x = xp

p_current = p_p

chain.append(x)

probs.append(p_current)100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 10000/10000 [00:02<00:00, 3736.71it/s]

plt.hist(chain, bins=100, density=True)

_ = plt.plot(jnp.linspace(-5,35), p_big_bimodal(jnp.linspace(-5,35)))

plt.tight_layout()

The sampler never explores the other modality. How can parallel tempering fix that?

- “Temper” the log density:

- High temperatures flatten the posterior surface, allowing our chains to explore more space

- Run many temperatures in parallel and propose swaps between them

Using Gradient Information¶

What if instead of a random walk, we wanted to guide our sampler? This is where Hamiltonian Monte Carlo (HMC) shines.

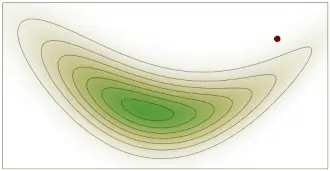

HMC essentially sets up a Hamiltonian for our probabilistic model and solves Hamilton’s equations. As such, we now have equations of motion for our sampler.

This helps tremendously with posteriors that have strange geometries or high dimension.

The general algorithm is:

- Define potential energy to be

- Sample kinetic energy from a proposal distribution

- Solve Hamilton’s equations numerically with a symplectic integrator for steps of stepsize ε

- Accept or reject the final position with a Metropolis step as in standard MCMC

- Repeat 2--4

An alternative to HMC is the No-U-Turn Sampler (NUTS) Hoffman & Gelman, 2011. NUTS aims to fix some issues with hyperparameter tuning in HMC, and it’s extremely prevalent in Bayesian inference.

NUTS removes the need to carefully tune the number of steps : it is an adaptive algorithm that chooses the best to properly explore the posterior. It follows the same algorithm as HMC, but for each iteration solves Hamilton’s equations forwards and backwards in time (reverses momentum sign) until the sampler makes a U-Turn (positions get closer together instead of further apart).

See https://

Let’s sample our simple 2D Gaussian model from earlier with the NUTS sampler from NumPyro.

key, _ = jax.random.split(key)

nuts_mcmc = infer.MCMC(infer.NUTS(simple_2d_gaussian), num_warmup=500, num_samples=1000, num_chains=3, chain_method="sequential")

nuts_mcmc.run(key, data)sample: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 1500/1500 [00:02<00:00, 738.73it/s, 3 steps of size 7.65e-01. acc. prob=0.91]

sample: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 1500/1500 [00:00<00:00, 1520.64it/s, 3 steps of size 5.57e-01. acc. prob=0.94]

sample: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 1500/1500 [00:00<00:00, 1838.53it/s, 3 steps of size 8.02e-01. acc. prob=0.88]

gauss_inf_data = az.from_numpyro(nuts_mcmc)

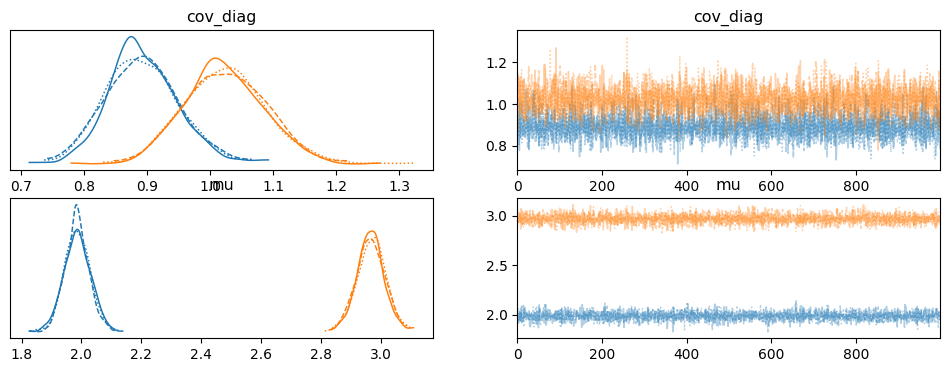

az.summary(gauss_inf_data, var_names="~cov_mat")az.rhat(gauss_inf_data,var_names="~cov_mat")_ = az.plot_trace(gauss_inf_data, var_names="~cov_mat")

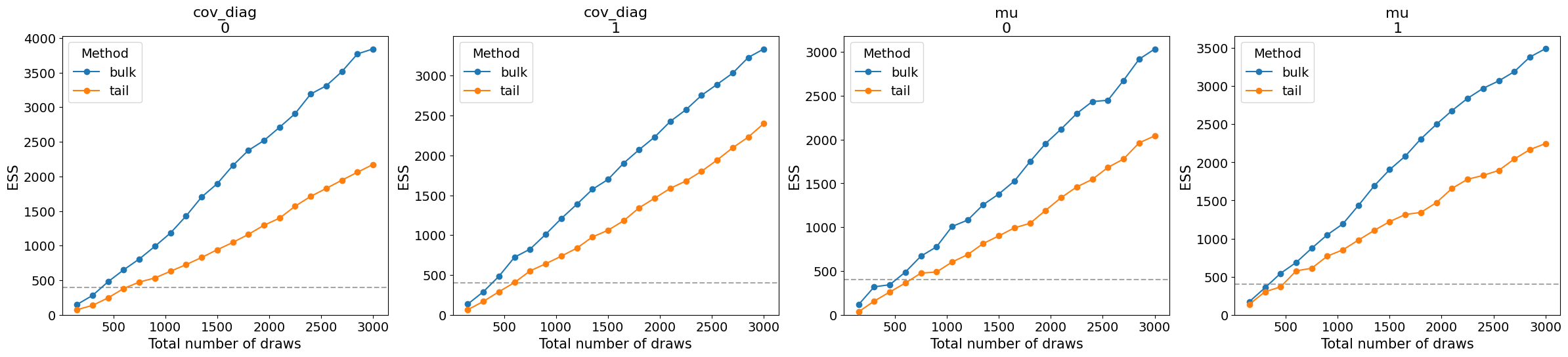

_ = az.plot_ess(gauss_inf_data, var_names="~cov_mat", kind="evolution")

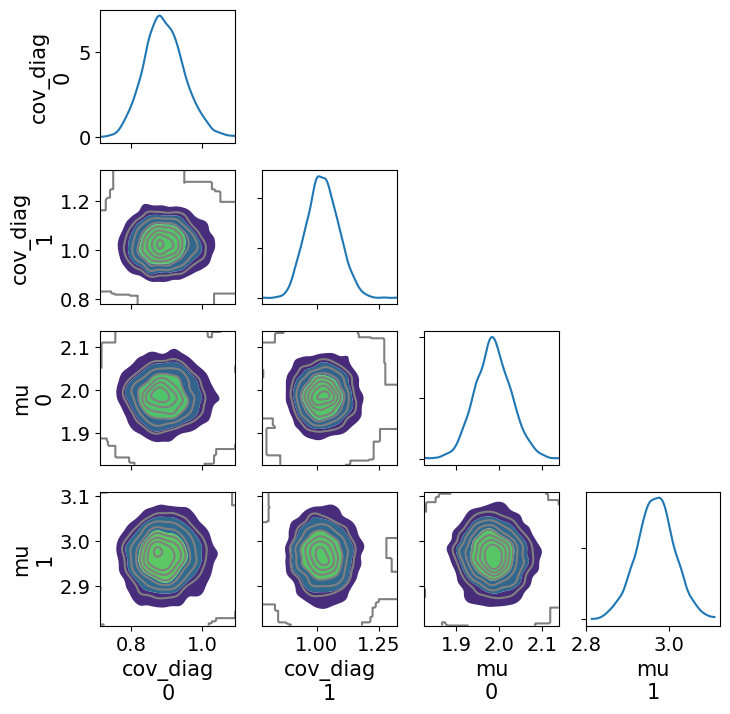

_ = az.plot_pair(gauss_inf_data, var_names="~cov_mat", kind="kde", marginals=True, figsize=(8,8))

Solution to Exercise 4

hz_nuts = infer.MCMC(infer.NUTS(hz_model), num_warmup = 500, num_samples = 1000, num_chains=3)

hz_nuts.run(hz_nuts, df['z'].to_numpy(), df['H_z'].to_numpy(), df['H_z_err'].to_numpy())

hz_inf_data = az.from_numpyro(hz_nuts)Bayesian Model Comparison¶

Now we know how to build models infer their unobserved parameters, but how do we know which model to use for our data? This is where model comparison comes in.

There are many ways to compare Bayesian models and quantify how well each explains your data. Most of these work by finding the Bayes’ factor for one model over another. Remember the Bayesian evidence that we mentioned earlier? The Bayes’ factor for one model over another is the ratio of their evidences.

For models and , the Bayes factor

We can find the evidence either directly, or indirectly. Directly computing the evidence is generally very hard, as mentioned previously. However, there is a class of samplers that can do this: Nested Sampling.

- Nested sampling is essentially an integration algorithm for the evidence with parameter inference as a side-effect. It also isn’t feasible to implement nested sampling in high dimension.

If our models are nested, we can compute the Savage-Dickey density ratio to find the Bayes’ factor.

- For models and with common parameters θ, assume when the parameter subset η that is unique to takes values . Then, the Bayes’ factor is

For indirect methods, I will mention two: product-space sampling and thermodynamic integration.

- Product space sampling works by defining a product-space model: a model that considers at least two submodels and treats model selection as a Bayesian problem.

For example, we could have a latent variable that indexes a list of model likelihoods and selects from them.

- After running our chains, the ratio of the number of samples in one model over another gives us the Bayes’ factor

- Thermodynamic integration is made possible by parallel tempering. We define an integral over the different temperature posterior samples that approximates the Bayesian evidence.

Solution to Exercise 5

from numpyro.contrib.nested_sampling import NestedSampler

key, _ = jax.random.split(key)

ns = NestedSampler(hz_model)

ns.run(key, df['z'].to_numpy(), df['H_z'].to_numpy(), df['H_z_err'].to_numpy())

ns.print_summary() # Evidence will be log Z

def hz_model_w0_wa(z, hz, hz_err):

h0 = numpyro.sample("h0", dist.Uniform(50,100))

w0 = numpyro.sample("w0", dist.Uniform(-2, 0))

wa = numpyro.sample("wa", dist.Uniform(-2, 0))

with numpyro.plate("data", hz.shape[0]):

numpyro.sample("loglike", dist.Normal(hz_forward(z, h0=h0, w0=w0, wa=wa), hz_err), obs=hz)

# Check the model

numpyro.render_model(hz_model_w0_wa, model_args=(df['z'].to_numpy(), df['H_z'].to_numpy(), df['H_z_err'].to_numpy()), render_distributions=True)

key, _ = jax.random.split(key)

ns_w0_wa = NestedSampler(hz_model_w0_wa)

ns_w0_wa.run(key, df['z'].to_numpy(), df['H_z'].to_numpy(), df['H_z_err'].to_numpy())

ns_w0_wa.print_summary() # Evidence will be log ZExpected result is roughly jnp.log10(-249 - -29271) = 4.46, so ! The data you used was actually not . It was generated with .

- Gelman, A., Carlin, J. B., Stern, H. S., Dunson, D. B., Vehtari, A., & Rubin, D. B. (2013). Bayesian Data Analysis (Third). CRC. https://stat.columbia.edu/~gelman/book/

- Hoffman, M. D., & Gelman, A. (2011). The No-U-Turn Sampler: Adaptively Setting Path Lengths in Hamiltonian Monte Carlo. arXiv. 10.48550/ARXIV.1111.4246